The Data Migration Process in ERP: A Step-by-Step Odoo Implementation Guide

Published on January 20th 2026

Introduction

As a business owner, you can have the best ERP installed in your systems. However, if your data is broken, your decisions will be too.

Every number you trust, every report you act on, starts with one thing: migration done right.

ERP data migration means moving information (customers, vendors, invoices, stock, and history) from old systems into Odoo. It looks straightforward, but the underlying data structure makes migration one of the most failure-prone phases of an ERP project.

The moment you analyze the data, you find duplicates, empty fields, and mismatched codes that no one has cleaned up in a decade.

Too many teams treat migration as a “technical transfer,” and that mindset is one of the most common reasons ERP implementations fail at critical stages.

In reality, it’s a strategic rebuild. You’re not just copying files; you’re restructuring the way your business stores, connects, and interprets information inside Odoo.

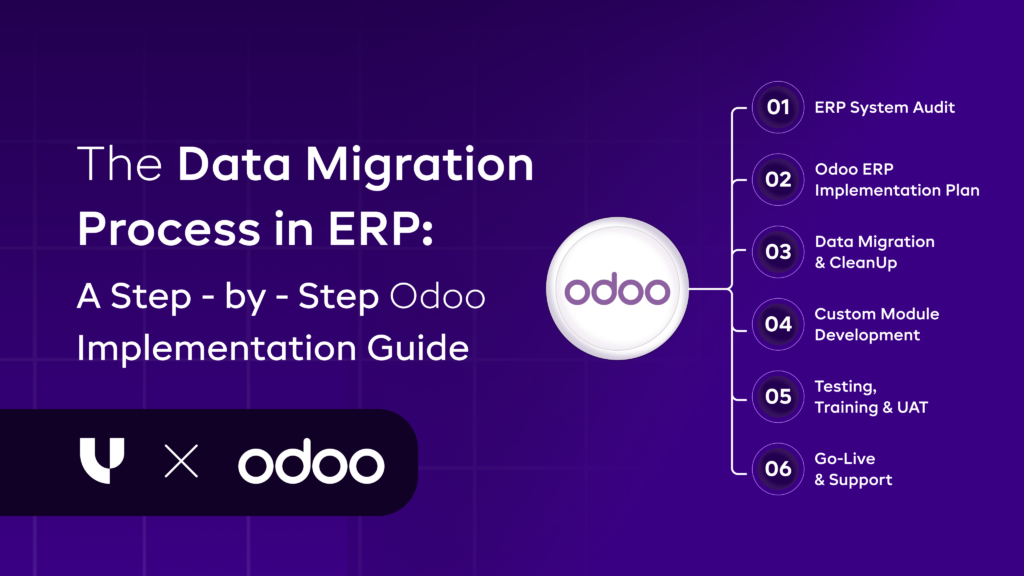

ERP Data Migration Process: A Step-by-Step Guide

Here is a clear, step-by-step view of how data moves from legacy systems to your new ERP, covering preparation, validation, and post-migration checks to ensure accuracy, continuity, and minimal business disruption.

Step 1: Data Assessment & Audit - Defining What the Business Actually Needs

Every ERP migration begins with a basic but often overlooked question:

What data does the business actually need to operate and make decisions today?

Over time, legacy systems accumulate large volumes of data, some useful, some outdated, and some kept only because no one ever reviewed it. Migrating everything may feel safe, but it usually creates more problems than it solves. It increases migration effort, slows testing, and makes the new ERP harder to use from day one.

This step exists to separate business-critical data from data that only adds noise.

What this step focuses on

Data assessment is not a technical scan of databases. It is a business-led review of how data is used across finance, operations, sales, and compliance.

At this stage, teams look at:

- Which data is required to run daily operations immediately after go-live

- Which data is required for statutory, audit, or regulatory purposes

- Which data exists only because it was never cleaned or reviewed

- Which reports depend on this data today

These discussions often surface inconsistencies in how different teams view the same data, making this step critical for alignment.

How the assessment is carried out

Data is reviewed in broad categories because each category serves a different purpose.

- Operational data such as customers, vendors, items, inventory balances, and open transactions is checked for current usage. Only records that actively support ongoing operations are shortlisted for migration.

- Financial and statutory data is reviewed to determine what must be available inside the ERP versus what can be archived and accessed separately for audits or historical reference.

- Legacy and reference data, such as inactive customers, obsolete items, and one-time vendors, is examined closely. This is where most unnecessary data is usually found.

A common example from ERP projects

In many mid-sized businesses, customer and item masters grow unchecked for years.

During one data audit:

- A large portion of customers had no transactions in the last three years

- Thousands of items were no longer sold or stocked

- Multiple versions of the same vendor existed due to inconsistent naming

Migrating all of this data would have made the ERP harder to navigate and increased confusion for users. Limiting migration to relevant records resulted in cleaner reports and faster adoption.
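The "no transactions in the last three years" rule from this audit is easy to apply mechanically once you have each customer's last transaction date. The sketch below is a minimal illustration, assuming you have already extracted a mapping of customer code to most recent transaction date; the function name, the three-year window, and the sample codes are hypothetical and should be replaced with your own policy and data.

```python
from datetime import date, timedelta

# Hypothetical input: customer code -> date of most recent transaction (None if never).
# The three-year window mirrors the audit example; adjust it to your own policy.
INACTIVITY_WINDOW = timedelta(days=3 * 365)


def split_by_activity(last_txn_by_customer, as_of):
    """Return (migrate, archive) lists of customer codes.

    A customer is kept for migration if it has at least one transaction
    within INACTIVITY_WINDOW before `as_of`; everything else is archived.
    """
    cutoff = as_of - INACTIVITY_WINDOW
    migrate, archive = [], []
    for code, last_txn in sorted(last_txn_by_customer.items()):
        if last_txn is not None and last_txn >= cutoff:
            migrate.append(code)
        else:
            archive.append(code)
    return migrate, archive


if __name__ == "__main__":
    customers = {
        "C001": date(2025, 11, 3),   # recent buyer
        "C002": date(2021, 6, 30),   # no activity for over three years
        "C003": None,                # created but never transacted
    }
    keep, park = split_by_activity(customers, as_of=date(2026, 1, 20))
    print("migrate:", keep)   # migrate: ['C001']
    print("archive:", park)   # archive: ['C002', 'C003']
```

Archived records are not deleted; they simply stay out of the Odoo load and remain available for reference.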

What the business gains from doing this well

When data assessment and audit are done properly:

- Migration scope becomes smaller and more manageable

- Testing becomes faster and more reliable

- Reports in the new ERP are clearer and more relevant

- Users trust the data sooner, which improves adoption

Step 2: Data Extraction - Pulling Data Without Breaking Daily Operations

Once the relevant data is identified in the audit stage, the next challenge is getting it out of existing systems safely and completely. Data extraction is not just about exporting files; it is about ensuring that critical business data is pulled accurately, consistently, and without disrupting ongoing operations.

Many ERP migrations face early setbacks here because extraction is treated as a technical task rather than a business-critical activity.

Why this step matters

Operational systems are usually still active when extraction begins. Sales orders are being created, invoices are being posted, and inventory levels are changing daily. Poor extraction planning can result in:

- Missing transactions

- Partial historical records

- Mismatched balances between old and new systems

This creates trust issues even before the new ERP goes live.

What actually happens during data extraction

Data is pulled from multiple sources, not just one system. These often include:

- ERP or accounting software

- CRM tools

- Inventory or warehouse systems

- Spreadsheets maintained outside core systems

Each source may store data in different formats and update cycles. The goal is to extract complete and consistent datasets that reflect a clear business snapshot.

For example, financial data usually needs to be extracted up to a specific cut-off date, while master data like customers or products may need the latest version.

How extraction is typically approached

Extraction is usually done in controlled phases:

- Static data extraction for master data that does not change frequently

- Transactional data extraction aligned with defined cut-off periods

- Incremental extracts to capture changes made after the initial pull

This phased approach reduces risk and avoids operational downtime.
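A transactional extract bounded by a cut-off date, followed by an incremental extract keyed on a last-modified timestamp, can be sketched in a few lines of SQL. This is an illustration only: it assumes a hypothetical legacy `invoices` table with `posted_on` and `modified_at` columns, and uses an in-memory SQLite database to stand in for the real source system.

```python
import sqlite3

# Hypothetical legacy schema: an `invoices` table with a posting date and a
# last-modified timestamp. Real legacy systems will differ; treat the table and
# column names here as placeholders.


def extract_transactions(conn, cutoff_date):
    """Initial pull: every invoice posted on or before the agreed cut-off date."""
    return conn.execute(
        "SELECT id, posted_on, amount FROM invoices WHERE posted_on <= ? ORDER BY id",
        (cutoff_date,),
    ).fetchall()


def extract_increment(conn, watermark):
    """Incremental pull: rows created or changed after the previous extract ran."""
    return conn.execute(
        "SELECT id, posted_on, amount FROM invoices WHERE modified_at > ? ORDER BY id",
        (watermark,),
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE invoices (id INTEGER PRIMARY KEY, posted_on TEXT, amount REAL, modified_at TEXT)"
    )
    conn.executemany(
        "INSERT INTO invoices VALUES (?, ?, ?, ?)",
        [
            (1, "2025-12-15", 1200.0, "2025-12-15T10:00:00"),
            (2, "2025-12-31", 450.0, "2025-12-31T16:30:00"),
            (3, "2026-01-05", 980.0, "2026-01-05T09:15:00"),  # posted after cut-off
        ],
    )
    print(extract_transactions(conn, "2025-12-31"))  # invoices 1 and 2
    # Later, pick up anything touched after the first extract finished.
    print(extract_increment(conn, "2026-01-01T00:00:00"))  # invoice 3
```

Storing dates as ISO 8601 strings keeps the comparisons correct in plain SQL; the watermark for each incremental run is simply the time the previous run started.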

Common challenges seen during extraction

Some practical issues that often surface:

- Data stored in custom fields with no documentation

- Inconsistent date formats and currencies

- Historical data locked in legacy systems with limited access

- Manual spreadsheets that differ from system records
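Inconsistent date formats are usually handled by trying a short, explicit list of known formats and rejecting anything else. The following is a minimal sketch, assuming the formats in `KNOWN_FORMATS` are the ones your extraction actually found; the list and the function name are illustrative, not a standard.

```python
from datetime import datetime

# Formats observed in the legacy sources. This list is an assumption: build
# yours from what the extraction actually turns up, and keep ambiguous
# day/month orders out unless you know which system produced the value.
KNOWN_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d-%b-%Y", "%Y%m%d")


def to_iso_date(raw):
    """Convert a legacy date string to ISO 8601 (YYYY-MM-DD) or raise ValueError."""
    text = raw.strip()
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue  # try the next known format
    raise ValueError(f"Unrecognised date format: {raw!r}")


if __name__ == "__main__":
    for value in ["2025-12-31", "31/12/2025", "05-Jan-2026", "20260120"]:
        print(value, "->", to_iso_date(value))
```

Raising on an unknown format, rather than guessing, pushes the odd records into a review queue instead of silently loading wrong dates.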

What a successful extraction achieves

When done correctly, this step ensures:

- No loss of critical historical or transactional data

- Clean handoff to the cleansing and transformation stages

- Alignment between operational reality and extracted records

Most importantly, it creates confidence that the new ERP is being built on complete and trustworthy data, not assumptions.

Step 3: Data Cleansing & Standardization – Fixing Issues Before They Enter the ERP

After extraction, the data often contains inconsistencies, duplicates, and missing information. Legacy systems tolerate these problems, but ERPs do not, especially when implemented through structured Odoo implementation services that rely on clean master data. Cleansing and standardization ensure that only accurate, uniform, and complete data enters the new system.

Why this step matters

Poor-quality data entering an ERP leads to immediate problems:

- Financial reports that don’t match reality

- Inventory records that don’t reconcile

- Users losing trust in the system

By cleansing data before migration, businesses avoid costly corrections after go-live and improve user adoption.

How cleansing and standardization are done

- Duplicate removal: Identify repeated customers, vendors, or items and merge them into a single record.

- Consistency enforcement: Standardize units of measure, currencies, product codes, and naming conventions.

- Completeness checks: Ensure mandatory ERP fields are filled correctly.

- Validation rules: Apply business rules to detect outliers, invalid values, or data that does not comply with ERP standards.
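Duplicate detection often starts with a normalized comparison key: lowercase the name, strip punctuation, and ignore legal-form suffixes so that "Acme Supplies Ltd." and "ACME SUPPLIES LIMITED" collide. This is a minimal sketch of that idea; the suffix list is an illustrative assumption, and real projects usually add fuzzy matching and a human review step before records are merged.

```python
import re
from collections import defaultdict

# Legal-form suffixes to ignore when comparing names. This short list is an
# illustrative assumption; extend it for the jurisdictions you trade in.
SUFFIXES = {"ltd", "limited", "inc", "llc", "pvt", "co", "corp"}


def match_key(name):
    """Reduce a company name to a comparison key: lowercase, no punctuation, no legal suffixes."""
    words = re.sub(r"[^a-z0-9 ]", " ", name.lower()).split()
    return " ".join(w for w in words if w not in SUFFIXES)


def find_duplicates(records):
    """Group record IDs whose names share the same match key; return only groups of 2+."""
    groups = defaultdict(list)
    for rec_id, name in records:
        groups[match_key(name)].append(rec_id)
    return {key: ids for key, ids in groups.items() if len(ids) > 1}


if __name__ == "__main__":
    vendors = [
        ("V01", "Acme Supplies Ltd."),
        ("V02", "ACME SUPPLIES LIMITED"),
        ("V03", "acme supplies"),
        ("V04", "Bolt & Nut Co."),
    ]
    print(find_duplicates(vendors))  # {'acme supplies': ['V01', 'V02', 'V03']}
```

The output is a list of merge candidates, not an automatic merge: someone who knows the vendors should confirm each group.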

Example: A company had “KG,” “KGS,” and “Kilogram” across its product database. Without standardization, inventory valuation would have been inconsistent, causing financial reporting errors.
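The KG/KGS/Kilogram problem is typically fixed with an explicit synonym table that maps every legacy label to one canonical unit and refuses anything it does not recognise. A minimal sketch, assuming the canonical names on the right-hand side match units defined in your Odoo database (they are placeholders here):

```python
# Synonym table for units of measure. The canonical names on the right are
# placeholders: use whatever units your Odoo database actually defines.
UOM_SYNONYMS = {
    "kg": "kg", "kgs": "kg", "kilogram": "kg", "kilograms": "kg",
    "g": "g", "gm": "g", "gram": "g", "grams": "g",
    "pc": "Units", "pcs": "Units", "unit": "Units", "units": "Units", "nos": "Units",
}


def standardize_uom(raw):
    """Map a legacy unit label to its canonical form, rejecting anything unmapped."""
    key = raw.strip().lower().rstrip(".")
    try:
        return UOM_SYNONYMS[key]
    except KeyError:
        raise ValueError(f"Unmapped unit of measure: {raw!r}") from None


if __name__ == "__main__":
    for label in ["KG", "KGS", "Kilogram", "Pcs."]:
        print(label, "->", standardize_uom(label))
```

Keeping the table as data rather than scattered `if` statements makes it easy for the operations team to review and extend.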

What this step achieves

- Accurate reports from day one

- Reduced user confusion

- Lower support effort post go-live

Step 4: Data Mapping & Transformation – Aligning Legacy Data With ERP Structure

Legacy data reflects old processes. ERP requires structured, standardized data to support optimized workflows. Directly importing data without mapping causes:

- Broken workflows

- Misaligned reports

- User frustration

Mapping ensures the ERP functions according to current and future business needs.

What happens during this step

- Field mapping: Decide how each legacy field maps to ERP fields.

- Data transformation: Merge, split, or reformat data to fit ERP requirements.

- Relationship alignment: Maintain links between customers, orders, invoices, and payments.

- Business logic adjustment: Replace legacy workarounds with ERP-standard workflows.

Example: A single “order status” in the old system may need multiple ERP statuses for fulfillment, billing, and shipping.

Key mapping considerations

| Legacy Field | ERP Field | Transformation Needed |
|---|---|---|
| Order Status | Fulfillment, Billing, Shipping Status | Split into multiple fields |
| Customer Type | Customer Class & Pricing Rules | Map to separate fields |
| Inventory Unit | ERP Unit & Category | Standardize units |
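The "split into multiple fields" transformation for order status can be expressed as a lookup table from each legacy code to its three target statuses. This is an illustrative sketch: the legacy codes, the target values, and the `*_status` field names are hypothetical and are not Odoo field names.

```python
# Hypothetical legacy codes and target values. The three target fields follow
# the mapping table; they are illustrative names, not Odoo field names.
STATUS_SPLIT = {
    "OPEN": {"fulfillment": "pending", "billing": "not_billed", "shipping": "not_shipped"},
    "PICKED": {"fulfillment": "done", "billing": "not_billed", "shipping": "ready"},
    "SHIPPED": {"fulfillment": "done", "billing": "to_bill", "shipping": "shipped"},
    "INVOICED": {"fulfillment": "done", "billing": "billed", "shipping": "shipped"},
    "CANCELLED": {"fulfillment": "cancelled", "billing": "cancelled", "shipping": "cancelled"},
}


def transform_order(legacy_row):
    """Replace the single legacy `status` column with three separate status fields."""
    row = dict(legacy_row)  # copy so the extracted source row is never mutated
    code = row.pop("status").strip().upper()
    if code not in STATUS_SPLIT:
        raise ValueError(f"No mapping defined for legacy status {code!r}")
    row.update({f"{k}_status": v for k, v in STATUS_SPLIT[code].items()})
    return row


if __name__ == "__main__":
    print(transform_order({"order_no": "SO-1001", "status": "shipped"}))
```

Failing on an unmapped status, rather than defaulting it, surfaces legacy codes that nobody documented before they reach the live system.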

Outcome:

- Functional ERP workflows

- Accurate reporting

- Reduced manual corrections post go-live

Step 5: Test Migration (Pilot Load) - Validating With Real Data

Even with careful assessment, extraction, cleansing, and mapping, real data often behaves differently in the ERP than expected.

Pilot migration is essentially a “dress rehearsal” that exposes hidden issues before the full go-live:

- Broken links between transactions and master data

- Discrepancies in financial balances, inventory valuation, or sales data

- Errors in custom fields, workflows, or business logic

- Misalignment in reporting outputs

Skipping this step is one of the top reasons ERP projects face major post-go-live issues.

How the pilot migration is carried out

1. Select a representative dataset

- Include key modules like finance, inventory, sales, and procurement.

- Ensure the sample contains all transaction types, active customers/vendors, and recent inventory movements.

2. Execute migration on a test environment

- Load the data using the same rules and transformations planned for full migration.

- Track any errors, warnings, or inconsistencies flagged by the ERP.

3. Validate key reports and balances

- Trial balances, AR/AP aging, open sales/purchase orders, and inventory valuation.

- Compare results against legacy system numbers.

4. Identify and resolve issues

- Adjust field mappings, transformations, or cleansing rules as required.

- Repeat pilot migration until all critical discrepancies are resolved.
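Comparing pilot results against legacy numbers is easiest when both sides are reduced to the same set of keyed totals (accounts, aging buckets, report lines). The sketch below assumes you have already produced those totals as `Decimal` values; the function name and sample figures are hypothetical.

```python
from decimal import Decimal

# Tolerance for "matching" totals. Zero is the right default for most financial
# balances; the parameter exists because some teams accept a documented rounding
# allowance on, e.g., inventory valuation.


def reconcile(legacy, erp, tolerance=Decimal("0.00")):
    """Compare two {account_or_report_key: total} dicts and list every mismatch.

    Returns a list of (key, legacy_total, erp_total, difference) tuples,
    including keys present on only one side (difference is None for those).
    """
    issues = []
    for key in sorted(set(legacy) | set(erp)):
        old = legacy.get(key)
        new = erp.get(key)
        if old is None or new is None:
            issues.append((key, old, new, None))
        elif abs(new - old) > tolerance:
            issues.append((key, old, new, new - old))
    return issues


if __name__ == "__main__":
    legacy = {"AR": Decimal("152300.00"), "AP": Decimal("88410.50"), "Inventory": Decimal("410000.00")}
    pilot = {"AR": Decimal("152300.00"), "AP": Decimal("88395.50"), "Inventory": Decimal("410000.00")}
    for row in reconcile(legacy, pilot):
        print(row)  # ('AP', Decimal('88410.50'), Decimal('88395.50'), Decimal('-15.00'))
```

Using `Decimal` instead of floats keeps the comparison exact, so a reported difference is a real difference and not binary rounding noise.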

Common issues uncovered in pilot migrations

| Area | Typical Problem | Pilot Fix |
|---|---|---|
| Inventory | Incorrect valuation due to rounding differences | Adjust transformation rules |
| AR/AP | Missing links to customer/vendor records | Correct field mapping |
| Finance | Trial balance mismatch | Reconcile and adjust mappings |
| Reports | Totals not matching legacy reports | Refine data transformation logic |
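Rounding differences in inventory valuation usually come from the order in which rounding is applied. The figures below are purely illustrative: a unit cost held to four decimals in a hypothetical legacy system, valued two different ways.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")

# Illustrative figures only: a unit cost carried to four decimals in the legacy system.
unit_cost = Decimal("2.3456")
quantity = 1500

# Rule A: round the unit cost to cents first, then multiply.
value_a = (unit_cost.quantize(CENT, rounding=ROUND_HALF_UP) * quantity).quantize(CENT)
# Rule B: multiply at full precision, round only the final value.
value_b = (unit_cost * quantity).quantize(CENT, rounding=ROUND_HALF_UP)

print(value_a, value_b, value_b - value_a)  # 3525.00 3518.40 -6.60
```

A 6.60 gap on one item looks trivial, but repeated across thousands of SKUs it becomes a trial balance mismatch. The fix is to pick one rule, apply it in the transformation step, and make sure it matches how the target system computes valuation.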

Outcome for the business

- Provides confidence in the accuracy of the migration plan

- Reduces risk of post-go-live surprises

- Offers a proof point for stakeholders that the ERP will reflect real operational and financial data

- Helps teams adapt workflows and reporting before full migration

Step 6: Complete Migration & Validation – Ensuring Accuracy on Day One

This is the make-or-break stage. The full migration moves all cleaned, mapped, and tested data into the live ERP environment. Mistakes here directly affect:

- Financial statements and compliance reporting

- Inventory accuracy and operational decisions

- User confidence and adoption rates

Decision-makers must treat this as a business-critical operation, not just a technical task.

How full migration is executed

1. Final preparation

- Freeze legacy system updates (cut-off date) to prevent transactional discrepancies.

- Confirm final mappings, transformation rules, and validation checks from the pilot migration.

2. Data load into ERP

- Execute the full migration using validated rules.

- Migrate master data first (customers, vendors, items), then transactional data (invoices, orders, payments).

3. Validation & reconciliation

- Validate financial totals, balances, and inventory against legacy systems.

- Review open transactions (AR, AP, sales, purchase orders) for completeness.

- Confirm master data consistency (customers, vendors, items).

4. Business sign-off

- Finance, operations, and key stakeholders review critical data.

- Any discrepancies are resolved before the ERP is officially operational.
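Loading masters before transactions is easier to enforce when every record carries a stable external ID and transactional files reference masters by that ID. Odoo's import tool can match related records by external ID through a column such as `partner_id/id`; verify the exact conventions in the import documentation for your Odoo version. The sketch below generates two CSV files in dependency order. The `legacy_partner_<code>` naming is a hypothetical convention, and a real invoice import also needs line items, which are left out here.

```python
import csv
import io

# A sketch of the "masters first, transactions second" rule for CSV imports.
# External ID naming (legacy_partner_<code>) is a hypothetical convention.


def partner_xml_id(code):
    """Stable external ID derived from the legacy customer code."""
    return f"legacy_partner_{code}"


def build_partner_csv(customers):
    """Master file: one row per customer, keyed by a stable external ID."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["id", "name", "email"])
    for c in customers:
        writer.writerow([partner_xml_id(c["code"]), c["name"], c["email"]])
    return buf.getvalue()


def build_invoice_csv(invoices, loaded_partner_ids):
    """Transaction file: refuses to emit a row whose customer has not been loaded yet."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["id", "move_type", "partner_id/id", "invoice_date"])
    for inv in invoices:
        partner_ref = partner_xml_id(inv["customer"])
        if partner_ref not in loaded_partner_ids:
            raise ValueError(f"Invoice {inv['number']}: customer {inv['customer']} not loaded")
        writer.writerow([f"legacy_invoice_{inv['number']}", "out_invoice", partner_ref, inv["date"]])
    return buf.getvalue()


if __name__ == "__main__":
    customers = [{"code": "C001", "name": "Acme Supplies", "email": "ap@acme.example"}]
    invoices = [{"number": "INV-0042", "customer": "C001", "date": "2025-12-31"}]
    partner_file = build_partner_csv(customers)         # import this file first
    loaded = {partner_xml_id(c["code"]) for c in customers}
    invoice_file = build_invoice_csv(invoices, loaded)  # then this one
    print(partner_file)
    print(invoice_file)
```

Because external IDs are stable, re-running the import for an affected module updates existing records instead of creating duplicates, which is what makes "re-run migration for affected modules" a safe mitigation.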

Common challenges during full migration

| Risk | Potential Impact | Mitigation |
|---|---|---|
| Missing transactions | Operational disruption | Re-run migration for affected modules |
| Misaligned AR/AP balances | Financial reporting errors | Reconcile and adjust data |
| Inventory mismatches | Operational inefficiencies | Physical count + ERP adjustment |
| Workflow failures | User adoption issues | Validate and adjust mappings/workflows |

Outcome for the business

- ERP goes live with accurate and complete data

- Stakeholders trust operational and financial numbers

- Fewer post-go-live support tickets and manual corrections

- Smooth adoption and process continuity

Step 7: Post-Migration Monitoring & Optimization - Sustaining ERP Value Over Time

Even after a successful migration, the work isn’t over. ERP success depends on maintaining data quality, monitoring system usage, and optimizing workflows. Without this step:

- Small errors in data can snowball into major operational issues

- Users may create shadow systems or workarounds, reducing ERP adoption

- Reporting accuracy declines, impacting strategic decisions

This step ensures the ERP remains trusted, reliable, and scalable long after go-live.

How post-migration monitoring is done

1. Early transaction review

- Monitor initial transactions for errors or inconsistencies

- Compare ERP outputs with expected business results

2. Data governance enforcement

- Define roles and responsibilities for maintaining master data accuracy

- Regularly validate mandatory fields, duplicates, and formatting standards

3. Workflow and process optimization

- Track actual system usage against intended workflows

- Identify bottlenecks or inefficiencies

- Make iterative adjustments to improve productivity

4. KPI and reporting validation

- Ensure key financial, operational, and compliance reports are accurate

- Adjust data structures or reporting logic as needed
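Regular governance checks can be automated against a live Odoo database through its external API. Odoo's XML-RPC interface provides `authenticate` on the `/xmlrpc/2/common` endpoint and `execute_kw` on `/xmlrpc/2/object`, and the `search_count` method returns how many records match a domain. The sketch below assumes fields such as `customer_rank`, `supplier_rank`, `vat`, and `default_code`, which exist in recent Odoo versions; confirm them against your version, and treat the specific checks as examples.

```python
import xmlrpc.client

# Checks to run on a schedule after go-live. Each entry is (model, domain, label).
# The specific rules are examples; pick the fields your business treats as mandatory.
CHECKS = [
    ("res.partner", [("customer_rank", ">", 0), ("email", "=", False)], "customers without email"),
    ("res.partner", [("supplier_rank", ">", 0), ("vat", "=", False)], "vendors without tax ID"),
    ("product.product", [("default_code", "=", False)], "products without internal reference"),
]


def connect(url, db, username, password):
    """Authenticate against Odoo's XML-RPC endpoints and return (models_proxy, uid)."""
    common = xmlrpc.client.ServerProxy(f"{url}/xmlrpc/2/common")
    uid = common.authenticate(db, username, password, {})
    models = xmlrpc.client.ServerProxy(f"{url}/xmlrpc/2/object")
    return models, uid


def run_health_checks(models, db, uid, password, checks=CHECKS):
    """Return {label: count of offending records} using `search_count`."""
    results = {}
    for model, domain, label in checks:
        results[label] = models.execute_kw(db, uid, password, model, "search_count", [domain])
    return results


# Example usage (server URL, database, and credentials are placeholders):
# models, uid = connect("https://erp.example.com", "prod", "audit@example.com", "API_KEY")
# print(run_health_checks(models, "prod", uid, "API_KEY"))
```

Tracking these counts over time matters more than any single value: a number that starts climbing after go-live is an early sign that users are bypassing a data entry rule.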

Common post-migration issues and corrective actions

| Issue | Potential Impact | Recommended Action |
|---|---|---|
| Mis-categorized inventory | Incorrect reporting, stock issues | Review and correct master data |
| Duplicate or missing customers/vendors | Billing or AR/AP errors | Enforce validation and cleanup rules |
| User workarounds | Reduced ERP adoption | Provide training and optimize workflows |
| Reporting discrepancies | Misguided decisions | Adjust reports and validate with stakeholders |

Outcome for the business

- Stable ERP operation: Data remains accurate and reliable

- Improved efficiency: Optimized workflows reduce manual effort

- High user confidence: Stakeholders trust the system, ensuring adoption

- Scalability: The system supports growth without recurring issues

Key Considerations Before Migrating to Odoo

Review Existing Business Processes

Odoo is designed to support standardized processes, but many businesses have evolved workflows with custom workarounds. Migrating without reviewing current processes can replicate inefficiencies in the new system.

What to do:

- Map key workflows in finance, inventory, sales, procurement, and operations.

- Identify gaps, redundancies, or outdated processes.

- Decide which processes can be improved or simplified during migration.

Example: A distribution company realized that three separate Excel sheets tracked inventory movement. Migrating as-is would complicate Odoo workflows. Streamlining these into a single inventory process reduced complexity and improved reporting.

Define Data Scope and Quality Requirements

Migrating unnecessary or poor-quality data increases project effort and risks errors in the new system.

What to do:

- Identify master data (customers, vendors, products) and transactional data (invoices, orders, inventory).

- Decide which historical data is essential and which can be archived.

- Define data quality standards (completeness, consistency, accuracy) before migration.
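A completeness standard is most useful when it is written down as a measurable threshold per field and checked automatically on every extract. This is a minimal sketch, assuming records arrive as dictionaries; the field names and target percentages are hypothetical examples, not recommended values.

```python
# Hypothetical quality standard: minimum share of records that must have each field filled.
COMPLETENESS_TARGETS = {"name": 1.00, "email": 0.95, "vat": 0.90}


def completeness_report(records, targets=COMPLETENESS_TARGETS):
    """Return {field: (filled_ratio, meets_target)} for a list of dict records.

    A field counts as filled when its value is not None and not blank.
    """
    total = len(records)
    report = {}
    for field, target in targets.items():
        filled = sum(1 for r in records if str(r.get(field) or "").strip())
        ratio = filled / total if total else 0.0
        report[field] = (round(ratio, 3), ratio >= target)
    return report


if __name__ == "__main__":
    sample = [
        {"name": "Acme", "email": "ap@acme.example", "vat": "GB123"},
        {"name": "Bolt", "email": "", "vat": "GB456"},
        {"name": "Cog", "email": "ops@cog.example", "vat": None},
        {"name": "Dyn", "email": "hi@dyn.example", "vat": "GB789"},
    ]
    for field, (ratio, ok) in completeness_report(sample).items():
        print(f"{field}: {ratio:.0%} {'OK' if ok else 'BELOW TARGET'}")
```

Agreeing on the thresholds with the business before migration turns "is the data good enough?" from a debate into a pass/fail check.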

Example Table: Data Scope Planning

| Data Type | Required for Odoo | Recommended Action |
|---|---|---|
| Customers | Yes | Migrate active and recent customers; archive inactive ones |
| Vendors | Yes | Include vendors used in the last 2–3 years |
| Inventory | Yes | Include current and near-future stock; exclude obsolete items |
| Historical Transactions | Partial | Only for audit or reporting purposes |
| Old Masters | No | Archive if not actively used |

Evaluate Customization Needs

Odoo offers modular features, but some businesses need customizations to fit their operations, which are best addressed through scalable Odoo customization services. Early assessment avoids surprises during migration.

What to do:

- Identify gaps between out-of-the-box Odoo modules and business requirements.

- Determine which processes need customization vs. standardization.

- Consider long-term maintenance: custom code requires ongoing updates and testing.

Example: A manufacturing firm needed a specific workflow for batch production tracking. Deciding this upfront allowed developers to design custom modules without delaying migration.

Plan for Integration with Existing Systems

Many businesses have other systems (CRM, e-commerce, HR, or legacy ERPs) that need to work with Odoo. Poorly planned integrations can disrupt operations.

What to do:

- List all systems that must connect with Odoo.

- Identify critical data flows and frequency (real-time vs. batch).

- Decide whether to use native Odoo connectors, third-party apps, or custom APIs.

Example: A retail company integrated Odoo with its e-commerce platform to sync orders and inventory in real time, preventing stockouts and manual updates.

Set Realistic Timelines and Resources

ERP migrations are complex projects. Underestimating effort can lead to rushed testing, skipped validations, or failed adoption.

What to do:

- Define clear project phases: assessment, extraction, cleansing, migration, testing, and go-live.

- Assign dedicated teams from IT, finance, operations, and leadership.

- Include buffer time for unforeseen issues.

Uncanny insight: Companies that allocate 20–30% of the total project timeline to data preparation, pilot testing, and user training achieve faster adoption and fewer post-go-live issues.

Consider Change Management and User Training

Even the best technical migration fails if users don’t adopt the system. Resistance to change is one of the most common post-go-live issues.

What to do:

- Communicate the benefits and changes to all stakeholders early.

- Conduct role-based training for each department.

- Identify super-users who can guide peers post-go-live.

Example: In one SME, creating a small “Odoo Champions” group reduced helpdesk tickets by 40% in the first month after go-live.

Evaluate Compliance and Security Needs

Migrating financial and operational data requires adherence to tax and data-protection rules such as GST, VAT, or GDPR. Security gaps can lead to compliance violations and financial penalties.

What to do:

- Ensure Odoo modules comply with local taxation and reporting rules.

- Implement user access controls and audit trails.

- Plan regular data backups and disaster recovery measures.

Testing & Validation Framework: How Uncanny Ensures Accuracy

When Uncanny runs an ERP migration, testing isn’t a checkbox; it’s the backbone.

Every build goes through three layers of checks before launch: the data, the process, and how people actually use it.

Here’s a tabular presentation of how it is executed in real-world operations.

| Phase | Focus Area | What We Do | Why It Matters |
|---|---|---|---|
| Data Verification | Master & transactional records | Triple-check data fields, duplicates, and missing links | A clean, accurate database prevents errors later |
| Process Validation | Business workflows | Simulate end-to-end workflows (order → fulfillment → invoice) | Ensures live work behaves as expected |
| Functional Verification | Module interactions & user access | Test roles, permissions, and integrations (CRM ↔ ERP) | Users won’t adopt systems that lock them out or confuse access |
| Relational Integrity | Data-model relationships | Confirm links between customer, invoice, and payment | Broken links lead to reporting gaps and wrong totals |
| UI / UX Validation | System usability and user experience | Real users run common tasks and report frustrations | Adoption is driven by how smooth the tool feels |
| Performance Testing | System speed, load-handling | Measure import times, interface response, and report generation | Slow systems kill trust and productivity |
| Go-Live Readiness Review | Final sign-off from stakeholders | Business owners review reports, totals, and dashboards | Ensures launch readiness and executive confidence |
| Post-Go-Live Monitoring | Ongoing data health & governance | Set alerts, cleanup routines, and periodic audits | Keeps the ERP reliable long after migration |


Uncanny’s Triple-Verification Process - Explained

1. Data Verification

This is where execution begins. Our team checks every table, every sheet, and every customer record twice, identifying duplicates, broken references, incorrect currencies, and outdated vendor codes.

If something doesn’t line up, we fix it here because once you go live, “just one wrong field” can throw off a whole balance sheet.

2. Process Validation

Once the data looks clean, we walk through the actual business flow. A quote becomes a sales order, becomes an invoice, becomes a payment. If anything feels off (a missing approval, a slow trigger), it’s fixed before users ever see it.

3. Functional Verification

Next comes the real-world test: how modules talk to each other.

Finance needs numbers from Sales. Inventory needs counts from Procurement.

We test every integration and every permission level, focusing on questions like:

- Can the warehouse user see what they need?

- Can Finance close a report without errors?

We don’t stop until every click feels smooth and predictable.

4. Relational Integrity & Reporting

You can’t just move data; you have to keep relationships intact. We test whether customer invoices still link to payments and whether stock adjustments are reflected in cost reports.

Then, totals are matched against legacy data. If yesterday’s report said $1,032, today’s must say the same. That’s how trust gets built: number by number.

5. Go-Live Readiness & Rollback

Before the big switch, we freeze data, run the full suite of checks, and test response times. We also prepare a rollback plan: if something fails, we roll back fast and fix it before it becomes a significant problem.

6. Post-Go-Live Monitoring

Once the migration is live, we review performance logs, identify null values, and clean up any anomalous records.

When the team sees stable dashboards and no unexpected numbers, that’s when everyone is confident in the integrations.

Uncanny Note

“Testing isn’t paperwork. It’s reputation. We know the migration is successful when data matches, workflows run, and users stop double-checking reports.”

Real-World Example: A Manufacturing Brand’s Smooth Transition

The client was a mid-sized parts manufacturer. Their ERP was 10 years old, and data was stored in Excel, SQL dumps, and local drives.

Generating reports was a slow, manual process that often took hours, and occasionally days, to complete.

Uncanny’s Approach - What we did

We followed a tiered approach to data migration with the following steps:

- Complete data audit: Our team conducted a comprehensive data audit, sorting, labeling, and scoring months of customer, vendor, and BOM tables for reliability.

- Data cleanup: Following the audit, we performed cleanup by merging duplicate management records, correcting unit codes, and addressing naming inconsistencies.

- Scripts: Our team built small scripts to pull live files from each site, so nothing went stale while testing.

- Data mapping: Next, our team mapped all data to Odoo: customer master, item codes, BOMs, and routing data.

- Fixing the fields: Where fields didn’t fit, we used transformation rules instead of patchy formulas. Every import was run through a trial load until the numbers and stock balances matched.

The Outcome

- Go-live happened in week four.

- Data validation achieved 99.8% accuracy across plants.

- The ERP rollout finished 40% faster than planned.

- Old monthly reports that once froze for hours now open in under a minute.

Client Note: “We assumed three months minimum. Uncanny closed it in four weeks, without any complications.”

Ensure a Smooth ERP Migration with Uncanny’s Expertise

Migrating to a new ERP like Odoo is more than moving data; it’s about accuracy, operational continuity, and user adoption. From assessing legacy data and cleansing it to mapping, testing, and post-migration monitoring, every step impacts business performance and reporting reliability.

Uncanny’s Odoo data migration services guide businesses through this entire process, ensuring data is clean, correctly mapped, validated, and optimized for long-term success. With our expertise, organizations can avoid post-migration issues, speed up adoption, and focus on growth instead of data headaches.

Partnering with Uncanny means ERP migration is strategically planned, risk-free, and aligned with business objectives, turning a complex project into a seamless transformation.

Contact us today to learn how our experts can help your business transition smoothly, reduce risks, and maximize ROI.

FAQs

Q. Why does data migration matter in Odoo?

Data migration matters because poorly migrated data puts your operations at risk. You can build the perfect setup, but if customer records or SKUs are not structured correctly, operations can fall apart. A clean migration is the foundation for reliable operations.

Q. How long does migration usually take?

It depends on the existing data. Some projects finish in weeks; others stretch because old files need cleaning first. We’ve done four-week migrations that looked impossible at the start; the secret was prep work, not rushing imports.

Q. Do we really need to move all our old data?

No, and you shouldn’t. Keep what drives the business today: active customers, open invoices, and live stock. The rest can stay archived for reference. Lighter systems perform better, and no one needs a ten-year-old quote clogging reports.

Q. What’s the most common mistake during migration?

Leaving it to IT alone. Migration isn’t just data; it’s understanding what those numbers mean. Finance, sales, and warehouse teams must review their parts. When they don’t, confusion hits after go-live. Collaboration saves rework later.

Q. How does Uncanny keep migrations accurate?

We verify everything three times: the raw data, the business process, and how it works once live. If a figure or flow doesn’t match, it’s fixed before launch. That’s how we hit near-perfect accuracy without stopping operations.
